Generative Pre-trained Transformers (GPT) have taken natural language processing (NLP) to new heights. OpenAI has been a front-runner in GPT development, most recently releasing GPT-4 in March 2023. This multimodal model accepts text and image inputs and produces text outputs. In this article, we trace the history of GPT models and analyze GPT-4’s advanced capabilities.

Understanding GPT Models

GPT models are deep learning models that generate human-like text. They have a wide range of applications, including summarizing text, generating code, translating text into other languages, and drafting blog posts and stories. They can also save time and money, and fine-tuning them on domain-specific data can improve their results further.

Before transformers, language models were typically built on recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which struggled to generate long, coherent text. In 2017, Google researchers introduced the transformer architecture in the paper “Attention Is All You Need,” revolutionizing NLP. Google’s Bidirectional Encoder Representations from Transformers (BERT), released in 2018, built on this architecture, but as an encoder-only model it was not designed to generate text from a prompt.

GPT-1, GPT-2, and GPT-3

OpenAI introduced its first GPT model, GPT-1, in 2018 as a proof of concept. The following year, it published a paper on GPT-2 and released the model to the machine learning community. GPT-2 was the state-of-the-art model in 2019, though its output tended to lose coherence over longer passages.

GPT-3, released in 2020, has more than 100 times as many parameters as GPT-2 and was trained on an even larger text dataset, which drove substantial performance improvements. It surprised the world with its ability to generate human-like text. OpenAI continued to improve GPT-3 through a series of iterations known as GPT-3.5, including the conversation-focused ChatGPT, which became one of the fastest-growing consumer applications ever, reaching an estimated 100 million users within two months of launch.
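
As a quick check on the “100 times” figure, here is the ratio using the commonly cited sizes of the largest model in each family (1.5 billion parameters for GPT-2, 175 billion for GPT-3); smaller variants of each family give different ratios:

```latex
\frac{N_{\text{GPT-3}}}{N_{\text{GPT-2}}} = \frac{175 \times 10^{9}}{1.5 \times 10^{9}} \approx 117
```

So “more than 100 times” is a fair rounding of the jump between the flagship models.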

GPT-4 Advancements

GPT-4, OpenAI’s latest release, is designed to improve model alignment: it is less likely to produce offensive or dangerous outputs and more likely to be factually correct. It also offers better steerability, letting users change its behavior on request, for example by writing in a different tone, voice, or style. On OpenAI’s internal adversarial factuality evaluations, GPT-4 scored 40% higher than GPT-3.5.
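
In OpenAI’s API, this kind of steering is expressed through the “system” message of a chat request. The minimal sketch below only builds two Chat Completions request bodies that share the same user prompt but differ in their system message; it sends nothing. The `gpt-4` model name, the helper name `steered_request`, and the prompts are illustrative assumptions.

```python
import json


def steered_request(tone_instruction: str, user_prompt: str, model: str = "gpt-4") -> dict:
    """Build a Chat Completions request body whose system message sets the style.

    Assumption: the model name and prompts are illustrative; nothing is sent.
    """
    return {
        "model": model,
        "messages": [
            # The system message carries the steering instruction (tone, voice, style).
            {"role": "system", "content": tone_instruction},
            # The user message carries the actual task, identical across styles.
            {"role": "user", "content": user_prompt},
        ],
    }


prompt = "Explain what a transformer model is."
formal = steered_request("You are a formal technical writer.", prompt)
playful = steered_request("You are a playful tutor who explains things with jokes.", prompt)

# Only the system message differs between the two request bodies.
print(json.dumps(formal["messages"][0]))
print(json.dumps(playful["messages"][0]))
```

Keeping the task in the user message and the style in the system message means the same prompt can be reused across tones without rewriting it.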

GPT-4 can also accept image inputs alongside text, although this capability is available only as a research preview. Users can supply interspersed text and images to specify any vision or language task. OpenAI has showcased examples highlighting GPT-4’s ability to interpret complex imagery such as charts, memes, and screenshots.

Human-Level Performance on Professional and Academic Benchmarks

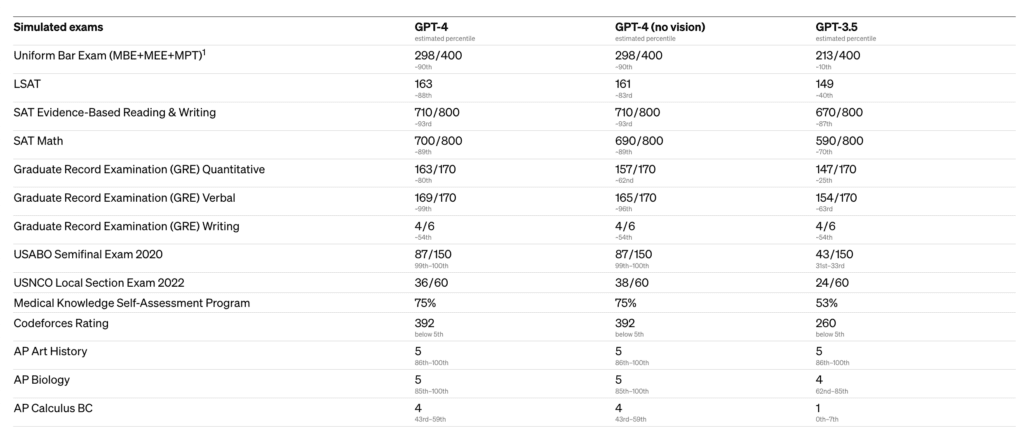

OpenAI has once again outdone itself with GPT-4. The team evaluated GPT-4 on simulated versions of exams designed for humans, including the Uniform Bar Examination, the LSAT for law school admission, and the SAT for university admission. GPT-4 exhibited human-level performance on these benchmarks; for example, it passed the simulated bar exam with a score around the top 10% of test takers, whereas GPT-3.5 scored around the bottom 10%.

Outperforming Existing Large Language Models

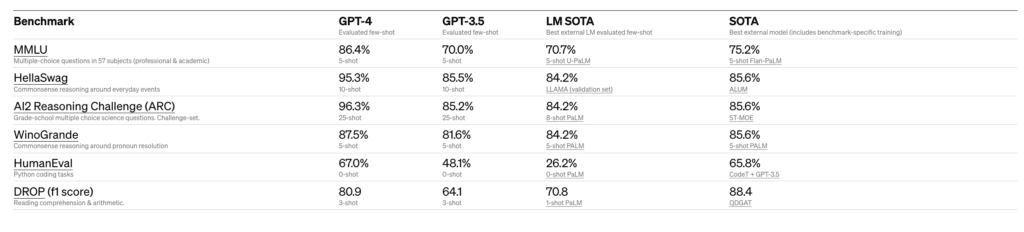

OpenAI also evaluated GPT-4 on traditional benchmarks designed for machine learning models, and the results were equally impressive. GPT-4 outperformed existing large language models, as well as most state-of-the-art models that rely on benchmark-specific crafting or additional training protocols. These benchmarks included MMLU (multiple-choice questions in 57 subjects), HellaSwag (commonsense reasoning about everyday events), the AI2 Reasoning Challenge (grade-school multiple-choice science questions), and more.
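
Multiple-choice benchmarks like these are usually scored by plain accuracy: the fraction of questions where the model’s chosen option matches the answer key. The sketch below shows one way to present a question with lettered options and to score predicted letters; the prompt layout and the helper names `format_question` and `multiple_choice_accuracy` are assumptions for illustration, not OpenAI’s actual evaluation setup.

```python
import string
from typing import Sequence


def format_question(question: str, choices: Sequence[str]) -> str:
    """Render a question with lettered options (A, B, C, ...) and an answer cue.

    Assumption: this layout is illustrative, not the prompt format OpenAI used.
    """
    lines = [question]
    for letter, choice in zip(string.ascii_uppercase, choices):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)


def multiple_choice_accuracy(predictions: Sequence[str], answer_key: Sequence[str]) -> float:
    """Return the fraction of questions where the predicted letter matches the key."""
    if len(predictions) != len(answer_key):
        raise ValueError("predictions and answer_key must have the same length")
    if not answer_key:
        raise ValueError("answer_key must not be empty")
    # Normalize whitespace and case so " c" and "C" count as the same answer.
    correct = sum(
        pred.strip().upper() == key.strip().upper()
        for pred, key in zip(predictions, answer_key)
    )
    return correct / len(answer_key)


print(format_question(
    "Which gas do plants absorb for photosynthesis?",
    ["Oxygen", "Carbon dioxide", "Nitrogen", "Helium"],
))
print(multiple_choice_accuracy(["A", "c", "B", "D"], ["A", "C", "D", "D"]))  # 0.75
```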

Multilingual Capabilities

OpenAI tested GPT-4’s capabilities in other languages by using Azure Translate to translate the MMLU benchmark, a suite of 14,000 multiple-choice problems spanning 57 subjects, into a variety of languages. Again, the results were exceptional: in 24 of the 26 languages tested, GPT-4 outperformed the English-language performance of GPT-3.5 and other large language models, including in low-resource languages such as Latvian, Welsh, and Swahili.
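
Note the shape of this comparison: each translated-language score is measured against a single English-language baseline, not against the baseline model’s score in that same language. The sketch below makes that explicit. The helper name `languages_beating_baseline` is hypothetical, and the accuracies are placeholder values, not OpenAI’s reported figures.

```python
def languages_beating_baseline(scores: dict[str, float], english_baseline: float) -> list[str]:
    """Return, sorted, the languages whose accuracy exceeds one fixed English baseline."""
    return sorted(lang for lang, accuracy in scores.items() if accuracy > english_baseline)


# Hypothetical placeholder accuracies for illustration only (NOT reported results).
toy_scores = {"lang_a": 0.72, "lang_b": 0.69, "lang_c": 0.74}
# Stands in for a reference model's English-language accuracy (also a placeholder).
toy_baseline = 0.70

winners = languages_beating_baseline(toy_scores, toy_baseline)
print(f"{len(winners)} of {len(toy_scores)} languages beat the baseline: {winners}")
```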

How to Access GPT-4

The good news is that OpenAI is making GPT-4’s text input capability available through ChatGPT, where it is currently offered to ChatGPT Plus subscribers. However, access to the GPT-4 API requires joining a waitlist, and OpenAI has not yet announced public availability of the image input capability.
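
For developers who have cleared the API waitlist, GPT-4 is called through OpenAI’s Chat Completions endpoint. The sketch below uses only Python’s standard library. It assumes the API key is stored in an `OPENAI_API_KEY` environment variable and that your account has access to the `gpt-4` model; the helper names `make_request` and `ask` are hypothetical, and retries and error handling are omitted.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def make_request(prompt: str, api_key: str, model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) a POST request for the Chat Completions endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the first reply's text.

    Assumption: OPENAI_API_KEY is set and the account can use the gpt-4 model.
    """
    req = make_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    # Chat Completions responses put the reply under choices[0].message.content.
    return payload["choices"][0]["message"]["content"]
```

With a valid key, `ask("Summarize GPT-4’s new capabilities in one sentence.")` returns the model’s reply as a string; `make_request` can be inspected without any network access, which is handy for checking the payload before spending tokens.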

OpenAI Evals: Guiding Further Improvements

OpenAI has also open-sourced OpenAI Evals, its framework for automated evaluation of AI model performance. The framework allows anyone to report shortcomings in OpenAI’s models and so help guide further improvements. Open-sourcing it is a significant step forward for developing AI models with increasingly advanced capabilities.
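
To give a feel for what “automated evaluation” means, here is a minimal exact-match eval loop: run each sample’s input through a model and count how often the output starts with the ideal answer. This is a standalone sketch, not the Evals API. The `input`/`ideal` field names loosely follow the JSONL sample layout described in the Evals documentation (an assumption worth checking against the repository), and `stub_model` is a hypothetical stand-in for a real model call.

```python
import json
from typing import Callable

# Hypothetical samples, one JSON object per line: a chat-style "input" and an "ideal" answer.
SAMPLES_JSONL = """\
{"input": [{"role": "user", "content": "What is 2 + 2?"}], "ideal": "4"}
{"input": [{"role": "user", "content": "What is the capital of France?"}], "ideal": "Paris"}
"""


def run_match_eval(samples_jsonl: str, complete: Callable[[list[dict]], str]) -> float:
    """Return the fraction of samples whose completion starts with the ideal answer."""
    samples = [json.loads(line) for line in samples_jsonl.splitlines() if line.strip()]
    if not samples:
        raise ValueError("no samples to evaluate")
    hits = 0
    for sample in samples:
        completion = complete(sample["input"]).strip()
        if completion.startswith(sample["ideal"]):
            hits += 1
    return hits / len(samples)


def stub_model(messages: list[dict]) -> str:
    # Hypothetical stand-in for a real model: it only knows the arithmetic question.
    question = messages[-1]["content"]
    return "4" if "2 + 2" in question else "I am not sure."


print(run_match_eval(SAMPLES_JSONL, stub_model))  # 0.5
```

Swapping `stub_model` for a function that calls a real model turns the same loop into a regression check that can be rerun whenever the model changes.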